Novartis Pharma AG commissioned FundamentalVR to create SIRIUSVR, an immersive learning experience that scales the teaching of the procedural knowledge and skills needed to deliver a groundbreaking gene therapy, while reducing Novartis' reliance on cadaver-based training.

My Roles

Interaction and UX Designer

Instructional Designer

Product Owner

My Deliverables

User journey and personas

Interaction design

Instructional copy

My Tools

Figma

Illustrator

Unity

Supplanting Conventional Training (and Saving the Rabbits)

For Novartis Pharma AG, training ophthalmology surgeons in animal wet labs was expensive and inefficient. The company needed to teach as many experts as possible how to deliver an innovative gene therapy in time for its market launch, and the limited capacity of such large facilities made this impossible. My team's challenge was to provide a simpler, more economical solution for skills acquisition using emerging technology, allowing Novartis to reduce its reliance on wet labs.

The Solution

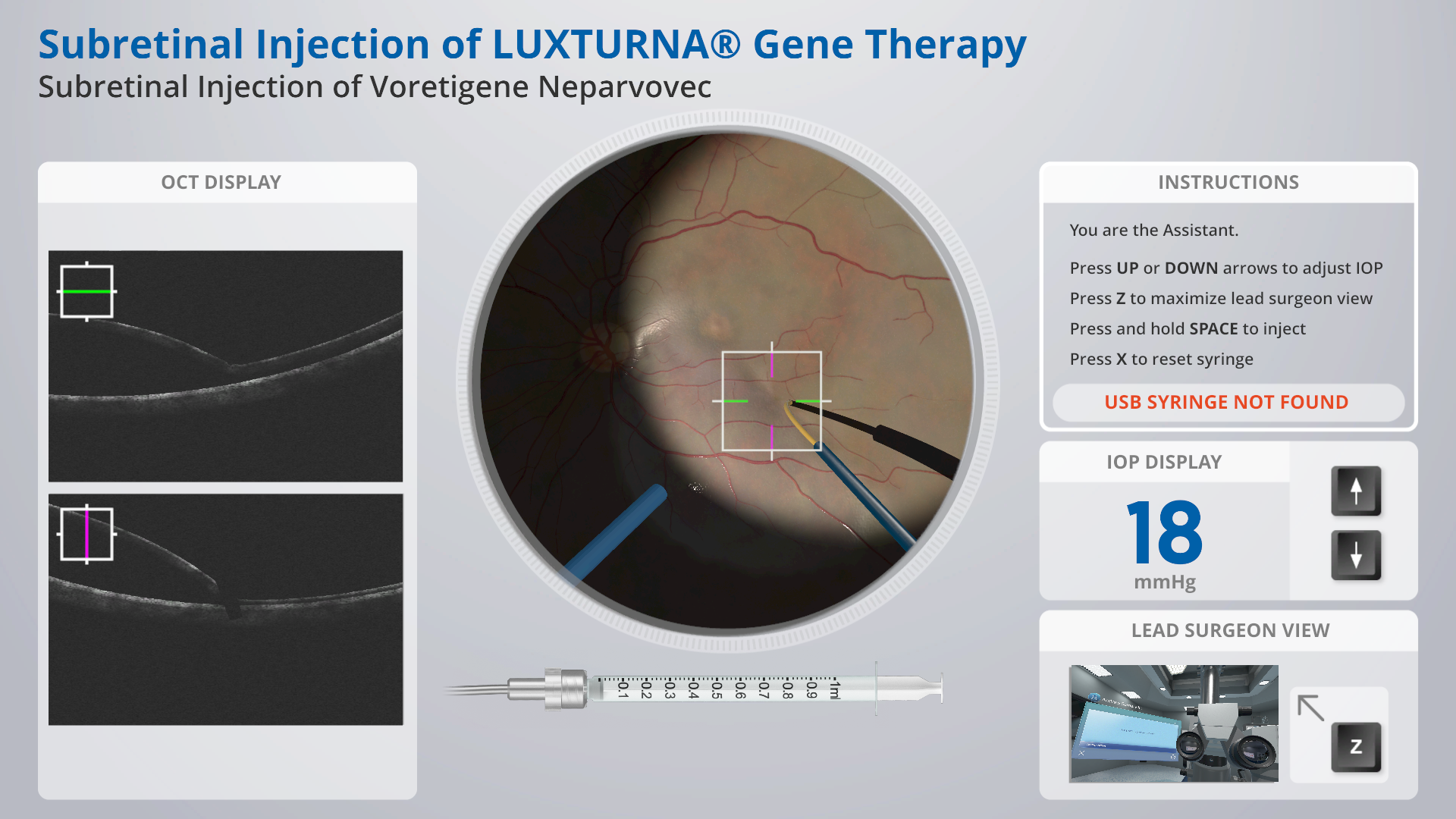

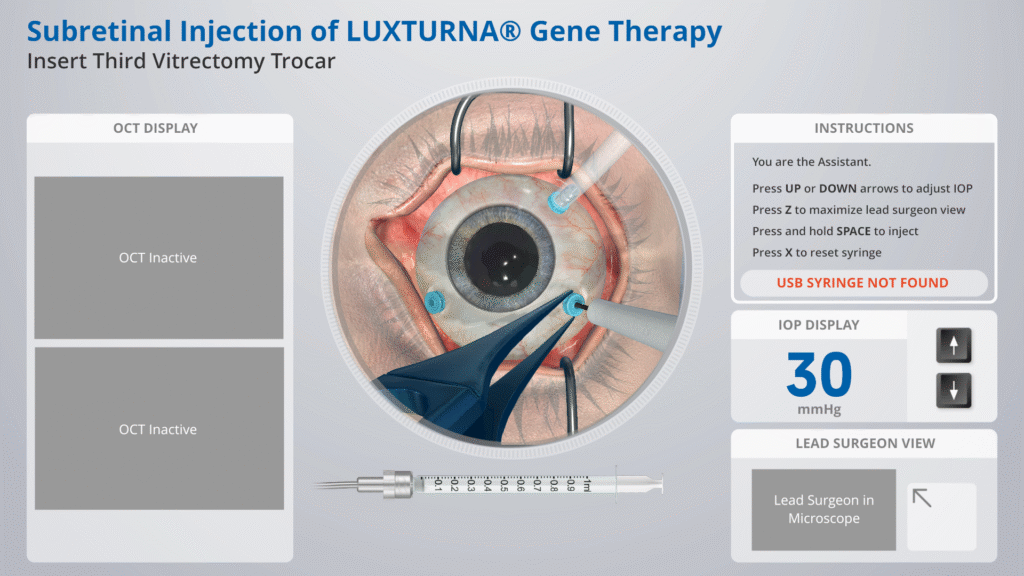

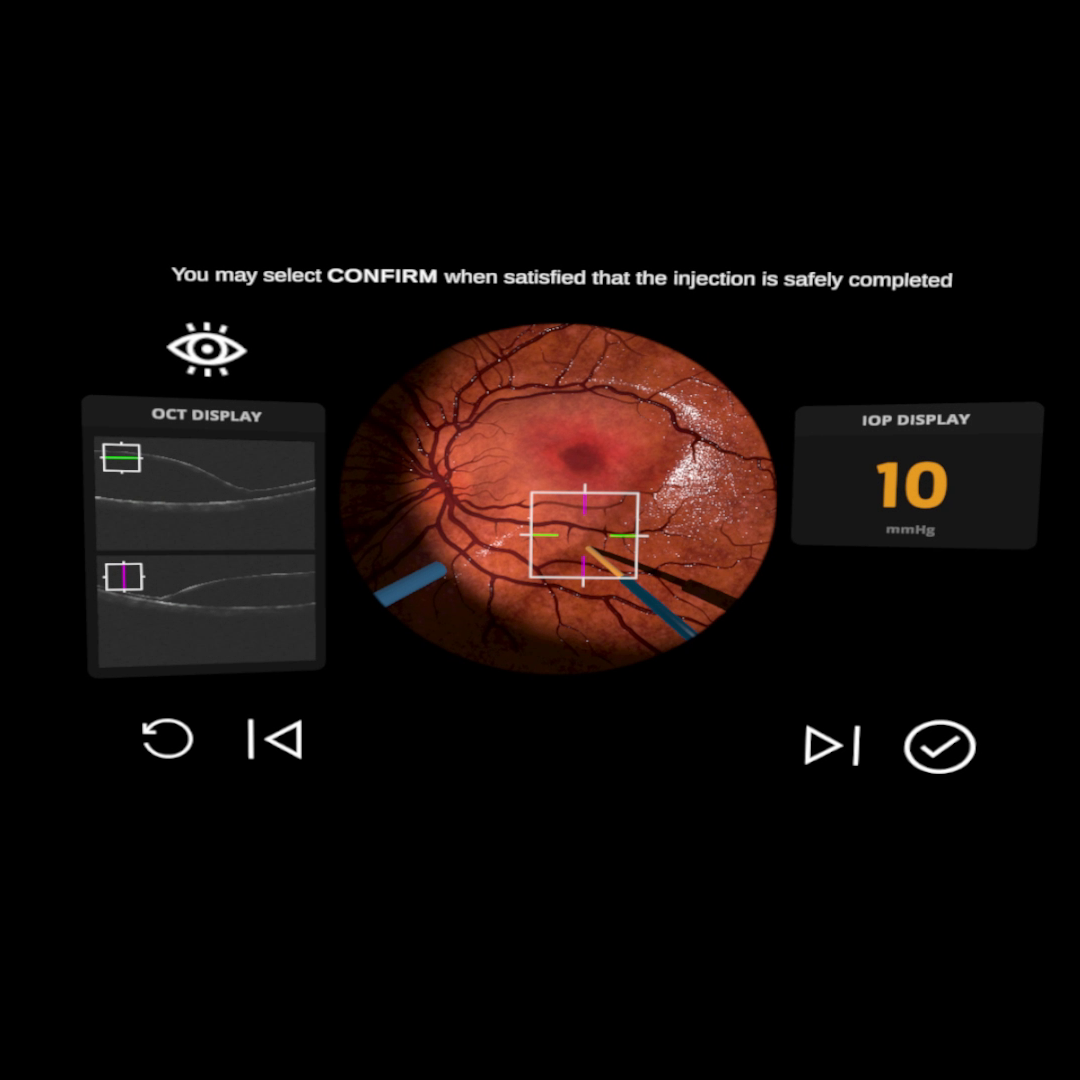

We launched a multi-modal experience simulating the procedure for sub-retinal injections guided by Optical Coherence Tomography (OCT). As in the real-life surgery, two users take part in the simulation: the 'primary' user inhabits the VR space and targets the retina with an injection cannula, while the 'assistant' user, working with a flat screen, keyboard and hardware syringe peripheral, deploys the drug.

Teaching, Not Training

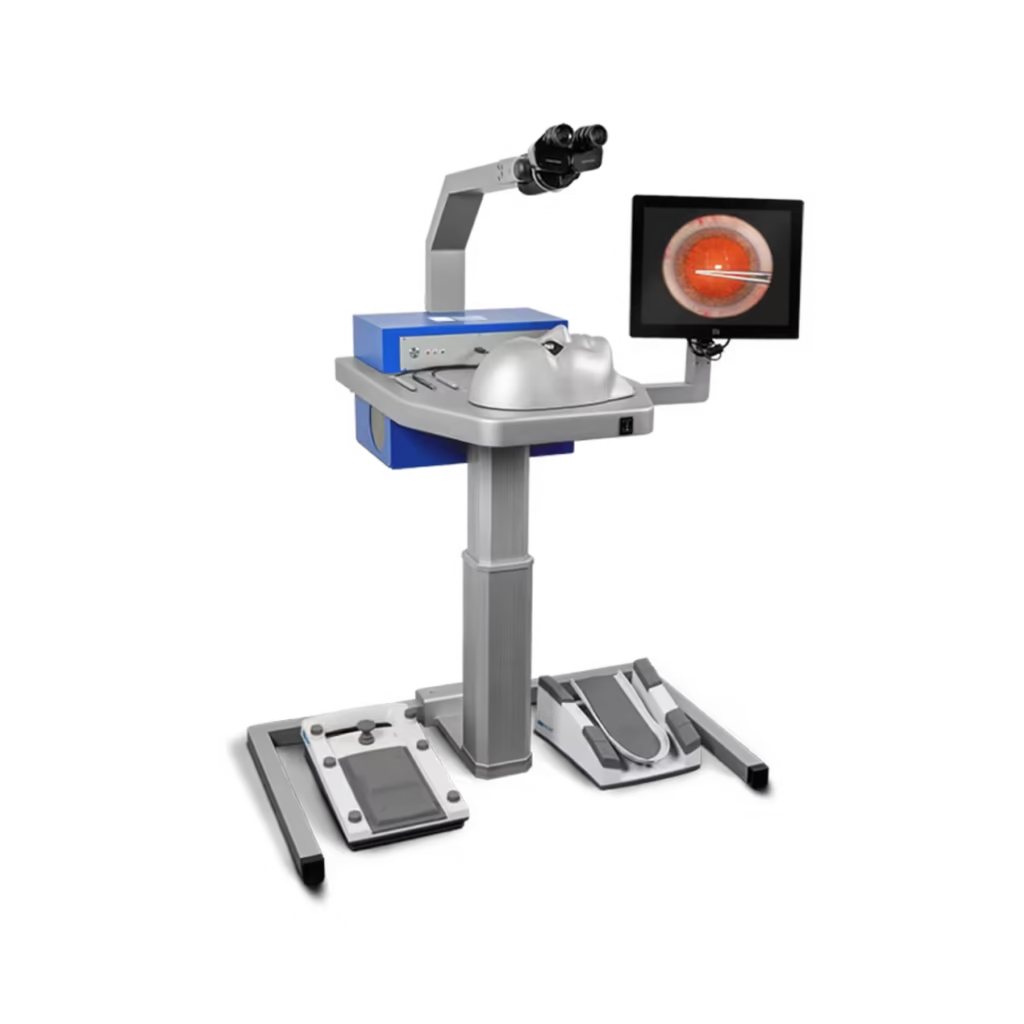

Existing surgical simulators are designed to convey the high-fidelity kinaesthetic (haptic) interactions needed to train surgeons, but they are expensive and rely on large, cumbersome hardware that is difficult to deploy at scale. The Eyesi Surgical simulator is one such example. These simulators were not much more economical than the wet labs Novartis wanted to move away from. Portable haptic interfaces combined with virtual-reality technology seemed to be the ideal compromise. However, project stakeholders understood that VR was too nascent and unproven a platform to be certified for skills validation in the highly regulated domain of medicine.

The Eyesi Surgical simulator costs tens of thousands of dollars and lacks features required by the procedure Novartis developed, namely accommodation for the assistant who deploys the syringe.

(Image © 2025 Haag-Streit)

Our solution could therefore not be used to certify clinicians to administer Luxturna, the gene therapy in question. It was important for the UI designer on the project and me to keep this in mind for the overall vision. I led an exercise in which we formulated design principles to keep us true to this understanding:

- We are not training users: they already know how to perform surgery

- It is the protocol they do not know; the product is an instructional manual

- Avoid suggesting that the simulation is a validation tool

- But do use an engaging, accurate experience to provide convincing context and convey trustworthiness

Although users had to perform surgical techniques to advance through the procedure, I took care not to judge experts on elementary surgical performance when writing the user journey and instructional copy. Instructions and feedback were presented matter-of-factly, like the directions we are used to following from satellite navigation. Carrying out the procedure would be like driving a new route: a satnav simply reroutes you if you miss a turn, without passing judgement. Feedback on adverse actions (like pushing on the retina too hard) was treated similarly: the user was simply alerted to deviations from the accepted guidance in a non-judgemental way, using passive, observation-based language ("there is too much pressure on the retina" vs "you are pushing too hard on the retina").

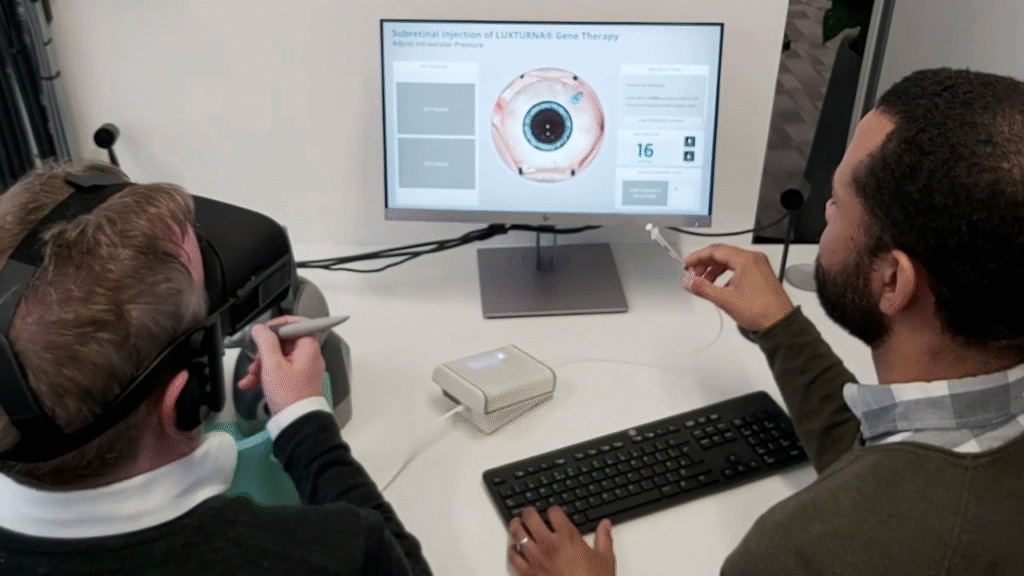

An early version of the application, shown with all components in the setup.

A Delicate Matter

Three surgeons committed to consulting with us. One of them happened to be Novartis' key opinion leader (KOL) for the project, which meant we had regular access to him. The other two were hard to pin down for work outside their clinical duties, since caring for patients came first. We worked in three-week sprints, so I suggested to the team that we pencil in one surgeon per week in rotation, with our most dependable stakeholder scheduled for the third week of each sprint, just ahead of the sprint review. This ensured that we kept the expert coverage we needed even if the other surgeons could not attend, and guaranteed a regular stream of feedback to inform planning for the next sprint.

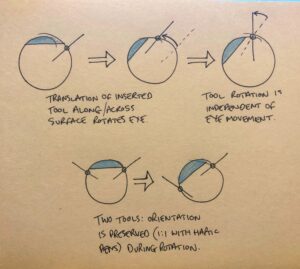

We had much to prove when it came to emulating the delicate forces involved in interacting with human anatomy. I prioritised developing small prototypes of key interactions (eye manipulation, needle placement), which the surgeons tested on site, while I worked on the overall user journey in parallel.

Surgeons used to a high standard of physical simulation needed convincing. How we could achieve this with bulky haptic pens standing in for small, thin surgical instruments was the subject of much prototyping and iteration. As the interaction designer, I also had to work around a further complication: the Geomagic Touch pens provide no rotational force feedback.

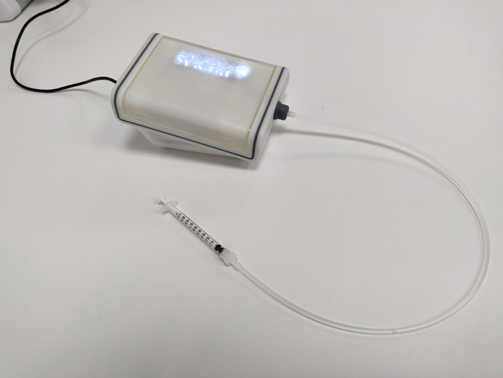

The Syringe Interface

The novel interface was constructed using an off-the-shelf syringe and hydraulics. The syringe device was designed and manufactured by an external party to our requirements, and coordinating the work to calibrate it, interface with it and design a dual-user experience around it was a constant challenge because of a variety of technical pitfalls. Perhaps the most significant was air ingress, which caused the potentiometer input to drift and, after shipping, left the digital and analogue readouts of the syringe's plunge depth misaligned.

Early prototyping of our syringe peripheral gave us a high degree of confidence that we could accommodate the assistant user’s activity well, but it was difficult to maintain.

We tried to mitigate this issue in two ways. First, I devised a simplified maintenance protocol, with printed instructions included in the product package, that allowed users to recalibrate the device. Second, I prioritised development of a small Unity app that let users calibrate the syringe's digital readout against its primary signifier: the graduated markings on the syringe itself. The app was suggested by an engineer on the team, who knew it would be a low-cost but high-value addition, since the code for reading values from the syringe had already been written for the main procedure.
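The case study does not describe how the calibration app mapped raw readings to plunger volume. As a minimal sketch, assuming the potentiometer responds roughly linearly to plunger travel, a two-point calibration could capture the raw reading with the plunger at the zero marking and at the top graduated marking, then interpolate between the two. The class and field names, the example readings and the 1 mL full-scale volume are all hypothetical, and the production app was built in Unity rather than Python.

```python
from dataclasses import dataclass


@dataclass
class SyringeCalibration:
    """Hypothetical two-point linear map from a raw potentiometer reading to volume."""

    raw_empty: float       # raw reading captured with the plunger at the zero marking
    raw_full: float        # raw reading captured at the top graduated marking
    full_volume_ml: float  # volume printed at that top marking (assumed value)

    def to_volume(self, raw: float) -> float:
        span = self.raw_full - self.raw_empty
        if span == 0:
            raise ValueError("the two calibration readings must differ")
        # Fraction of full plunger travel; a negative span (inverted pot) still works.
        fraction = (raw - self.raw_empty) / span
        # Clamp to the physical range of the barrel so noise cannot report
        # volumes below empty or above full.
        fraction = min(max(fraction, 0.0), 1.0)
        return fraction * self.full_volume_ml


if __name__ == "__main__":
    # Hypothetical readings: 112 at the zero marking, 912 at the 1 mL marking.
    cal = SyringeCalibration(raw_empty=112, raw_full=912, full_volume_ml=1.0)
    print(cal.to_volume(512))  # 0.5
```

Re-capturing both points after shipping would absorb offset and gain drift, such as drift caused by air ingress, as long as the response stays linear; a nonlinear response would need more calibration points along the graduated scale.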

Results

The simulation was judged favourably by subject matter experts in four key domains: 1) ergonomics and haptics, 2) comparison with traditional animal wet labs, 3) additional features not available in traditional wet labs, such as 'hyper-training', and 4) overall assessment.

During this training, I felt like I was really in an operating room. In addition, I was able to look through a microscope and perform the operation with instruments that I normally also use during an operation. This training ensures that my colleagues and I are as well prepared as possible for the first retinal operations with gene therapy that we will be performing soon.

Koen van Overdam, retinal surgeon

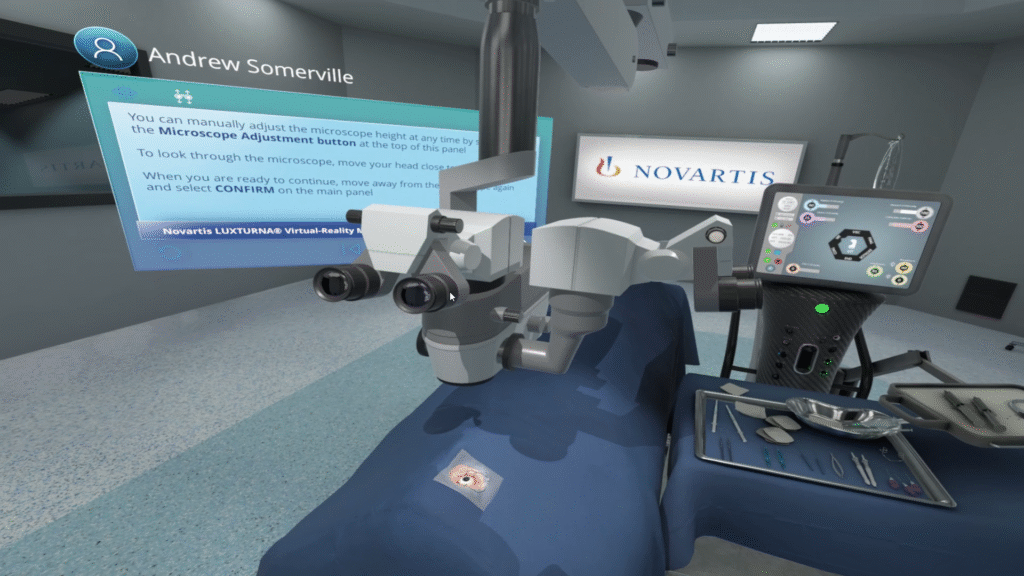

The virtual OR occupied by the primary user.

The microscope seen in the picture is interactive, allowing the user in VR to look through it for appropriate visualisation of the patient’s eye.

Novartis wanted to be seen as an early proponent of VR training to set itself apart from its competitors. To this end, other stakeholders and I submitted the paper "Virtual reality haptic surgical simulation for sub-retinal administration of an ocular gene therapy" (Investigative Ophthalmology & Visual Science Vol. 61, Issue 7, June 2020) to the annual meeting of the Association for Research in Vision and Ophthalmology (ARVO).

However, the convoluted hardware setup and fragile syringe interface remained unwieldy. Novartis saw value in the experience but was nervous about deploying and maintaining the overall solution. Senior stakeholders and I saw an opportunity to run deployments ourselves, and conceived the Novartis Concierge Service as a result.

As of 2023, there had been 30 deployments across 22 countries on 5 continents, with over 2,000 patients treated as a direct result of SIRIUSVR training. Although we could not have foreseen it during development, the COVID pandemic proved a surprising boon for adoption of the service: social distancing and travel restrictions were exactly the kind of limitations this VR solution could naturally overcome.

Case Study: SIRIUS VR

All images and trademarks © 2025 Novartis Pharma AG, unless otherwise stated. All rights reserved.